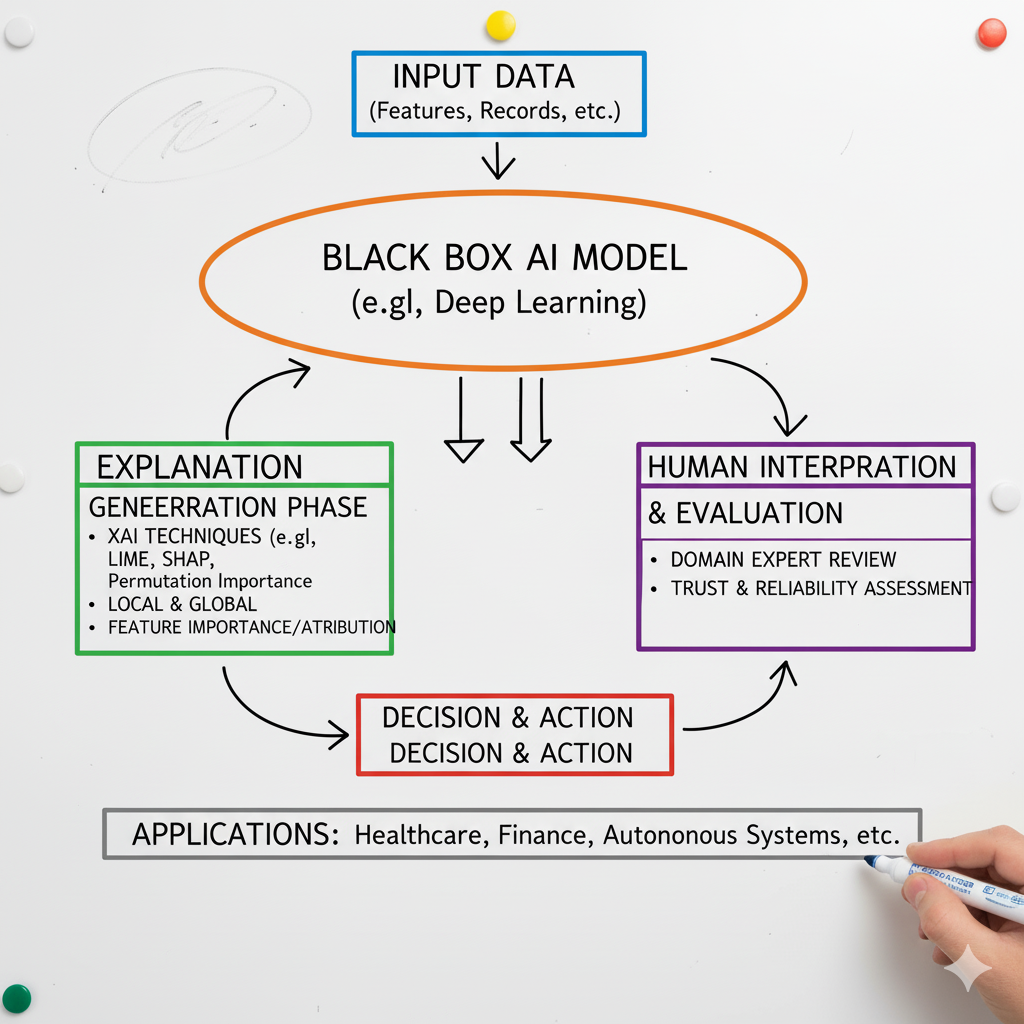

Artificial Intelligence (AI) is transforming industries, but many AI systems act as “black boxes”: they make decisions without showing how they arrived at them. Explainable Artificial Intelligence (XAI) aims to address this problem. XAI is a branch of AI focused on making machine learning models transparent, interpretable, and understandable to humans.

In simple terms: XAI lets you see the “why” behind AI decisions, which helps make AI more trustworthy and accountable.

Why Explainable AI Matters

- Trust: Users are more likely to adopt AI when they understand its decisions.

- Accountability: Explanations help businesses identify errors or biases in AI models.

- Compliance: Regulations such as the EU’s GDPR require organizations to give people meaningful information about the logic behind certain automated decisions.

- Better AI: Understanding model behavior helps developers debug models and improve their performance.

Analogy: Think of AI as a chef. A black-box AI just serves the dish, while XAI also walks you through the ingredients and steps, so you know what went into the meal.

Core Techniques of XAI

XAI uses several methods to make AI decisions interpretable:

1. Model-Specific Techniques

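- Rely on the internals of a particular type of model, so they work only for that model family.

- Example: Decision trees and linear regression are interpretable by design; you can follow a tree’s decision path or read a linear model’s coefficients directly.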
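To make the linear-regression case concrete, here is a minimal Python sketch of a hypothetical linear scoring model (the feature names, weights, and bias are invented for illustration). Because the prediction is a weighted sum, each feature’s contribution is simply its weight times its value, and the contributions add up to the prediction minus the bias.

```python
# Hypothetical linear scoring model: score = BIAS + sum(weight * value).
# Feature names, weights, and bias are illustrative, not from a real model.
WEIGHTS = {"income": 0.5, "debt": -0.75, "years_employed": 0.25}
BIAS = 1.0


def predict(applicant: dict[str, float]) -> float:
    """Return the model's score for one applicant."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in applicant.items())


def explain(applicant: dict[str, float]) -> dict[str, float]:
    """Return each feature's additive contribution (weight * value)."""
    return {name: WEIGHTS[name] * value for name, value in applicant.items()}


applicant = {"income": 4.0, "debt": 2.0, "years_employed": 5.0}
print(predict(applicant))  # 2.75

# List features from most to least influential by absolute contribution.
ranked = sorted(explain(applicant).items(), key=lambda kv: -abs(kv[1]))
for name, contribution in ranked:
    print(f"{name:>15}: {contribution:+.2f}")
```

Here income pushes the score up by 2.0, debt pulls it down by 1.5, and years employed adds 1.25. No extra tooling is needed because the model’s structure is itself the explanation.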

2. Model-Agnostic Techniques

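- Treat the model as a black box and rely only on its inputs and outputs, so they can explain any AI model, even complex ones like deep neural networks.

- Popular methods include:

  - LIME (Local Interpretable Model-agnostic Explanations): Explains an individual prediction by fitting a simple, interpretable surrogate model (such as a linear model) to the black-box model’s behavior around that one input.

  - SHAP (SHapley Additive exPlanations): Assigns each feature a contribution to a prediction using Shapley values from cooperative game theory; aggregating these contributions across many predictions gives a global view of feature importance.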
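SHAP is built on Shapley values. The pure-Python sketch below computes exact Shapley values for a tiny hypothetical model by enumerating every coalition of features. It assumes that a feature “absent” from a coalition is replaced by a single baseline value; the SHAP library instead averages over background data and uses faster approximations, so treat this as an illustration of the idea, not of the library’s API.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> list[float]:
    """Exact Shapley value of each feature of x, relative to a baseline input."""
    n = len(x)

    def value(coalition: set[int]) -> float:
        # Features in the coalition keep their real values; all others are
        # replaced by the baseline (a simplifying assumption).
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight numerator |S|! (n-|S|-1)!; divide by n! once at the end.
                weight = factorial(size) * factorial(n - size - 1)
                total += weight * (value(s | {i}) - value(s))
        phis.append(total / factorial(n))
    return phis


def model(z: Sequence[float]) -> float:
    """Hypothetical model with one additive term and one interaction term."""
    return 2 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)  # [2.0, 3.0, 3.0]
print(sum(phi) == model(x) - model(baseline))  # True
```

Feature 0 enters the model additively, so it receives its full effect of 2, while the interaction term x1 * x2 = 6 is split evenly between features 1 and 2. The contributions sum to model(x) - model(baseline), the additive property SHAP relies on. Exact enumeration needs 2^(n-1) coalitions per feature, which is why practical tools approximate.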

3. Visualization Tools

- Heatmaps (for example, saliency maps that highlight the image regions behind a prediction), feature importance charts, and partial dependence plots help humans understand model behavior visually.
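A partial dependence plot is just a curve of averaged predictions. This minimal sketch computes the numbers behind one, assuming a hypothetical model and a tiny made-up dataset: for each grid value, it forces one feature to that value in every row and averages the model’s outputs.

```python
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def partial_dependence(
    model: Model, data: Sequence[Sequence[float]], feature: int, grid: Sequence[float]
) -> list[float]:
    """Average prediction when `feature` is set to each value in `grid`."""
    curve = []
    for v in grid:
        total = 0.0
        for row in data:
            modified = list(row)
            modified[feature] = v  # overwrite only the feature of interest
            total += model(modified)
        curve.append(total / len(data))
    return curve


def model(z: Sequence[float]) -> float:
    """Hypothetical model: additive in feature 0, interaction between 1 and 2."""
    return 2 * z[0] + z[1] * z[2]


data = [[1.0, 2.0, 3.0], [0.0, 1.0, 2.0], [2.0, 4.0, 1.0]]
grid = [0.0, 1.0, 2.0]
print(partial_dependence(model, data, feature=0, grid=grid))  # [4.0, 6.0, 8.0]
```

Plotting `grid` against the returned curve gives the partial dependence plot; here it is a straight line with slope 2, matching the model’s additive term. Because the curve is an average, it can hide interactions between features. For real projects, scikit-learn provides `sklearn.inspection.partial_dependence` for its estimators.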

Real-World Applications of XAI

- Healthcare: Explainable AI can clarify why an algorithm diagnosed a disease or suggested a treatment.

- Finance: Helps banks understand credit scoring decisions and detect unfair bias.

- Autonomous Vehicles: XAI can help engineers understand why a self-driving system made a particular driving choice.

- HR & Recruitment: Explaining AI decisions can reveal bias in candidate selection, helping organizations avoid it.

Challenges of Explainable AI

- Complex Models: Deep learning models are often hard to explain.

- Trade-off: Simpler, more interpretable models are sometimes less accurate than complex ones, so improving explainability can cost some accuracy.

- Standardization: No universal approach exists yet for all AI models.

Key Takeaways

- XAI helps turn AI from a “black box” into a transparent and trustworthy system.

- Techniques like LIME and SHAP allow businesses and individuals to understand AI decisions.

- XAI is crucial in high-stakes industries like healthcare, finance, and autonomous systems.

- Despite its challenges, explainable AI is central to deploying AI responsibly.

Human Tip: Always ask, “Can I explain why this AI made this decision?” If you can, the system is explainable. If you can’t, it might be time to reconsider deploying it.

Conclusion

Explainable AI bridges the gap between machine intelligence and human understanding. By embracing XAI, we make AI not only more understandable but also safer, fairer, and more trustworthy. Whether you’re a developer, business owner, or student, understanding XAI is essential for the AI-driven world ahead.